A look at NDepend

Earlier this year, the developers of NDepend were kind enough to grant me a free license for their tool. They invited me to try it out and consider writing something about it.

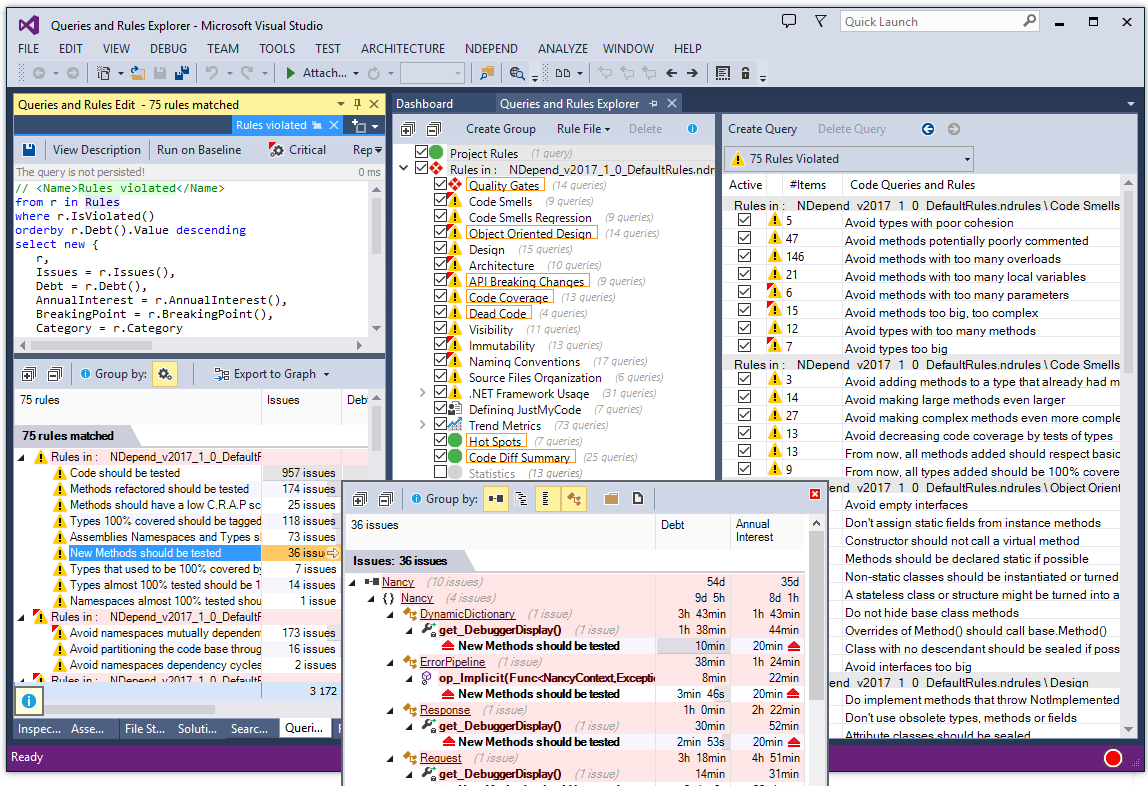

So this post will be a brief pause from the usual content of my blog, as we will be taking a quick look at some of the functionality of this software. I’ve also opted to include a few screenshots from the NDepend site, because I preferred them to the ones I took myself.

What is NDepend

The website of NDepend describes it as a technical debt estimation tool. “Technical debt” is a term usually used to describe the degree to which technical decisions made in the past (generally a result of a lack of time, experience, or decent tools) can adversely affect the maintainability and extensibility of a particular project.

Installation

I downloaded a ZIP archive from the website. The instructions I had received told me to extract it somewhere on my computer and run one of the executables inside it, called Visual NDepend.

Visual NDepend has a window reminiscent of Visual Studio's. On the start page, there is a button that offers to add NDepend as an extension to any of my existing Visual Studio installations. I'm told it can also integrate with Visual Studio Team Services, Jenkins, TeamCity, etc., but I don't have a setup to test these. No extension seems to exist for VS Code right now, which I think is a bit of a waste, but understandable given how different its extension architecture must be from that of the full Visual Studio.

The installation went absolutely smoothly. I gave the other settings a quick glance and figured I'd probably be tweaking them in the future, but for now I'd stick to the defaults.

With that done, I opened Visual Studio Community 2017 and tried NDepend on some of my existing projects.

The dashboard

Through the newly added NDepend menu item in Visual Studio, I attached the extension to an open solution and asked it to analyze my assemblies. After a while, it produced a fair number of warnings and a couple of errors.

It also generated a comprehensive report. I can imagine this being useful for creating presentations that communicate the progress being made in a codebase, especially when trying to demonstrate to non-technical people the value of investing resources in refactoring.

At the top there is an important setting: the baseline. This tells NDepend what to compare your current codebase against. Setting a baseline isn't required, but if I do set one, the tool can tell me how much I've messed up or improved things compared to it: whether any new issues have appeared, whether any old ones have been fixed, whether the technical debt has increased or decreased, and so on. Certain metrics are inherently comparative, so they don't make sense without a baseline.
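How NDepend diffs two analyses internally isn't described here, so the following Python sketch only illustrates the general idea of a baseline comparison. It assumes, hypothetically, that an issue is identified by the rule it violates plus the code element where it occurs, and that each issue carries an estimated fix time; `Issue`, `compare_to_baseline`, and all names and figures are made up for illustration.

```python
from dataclasses import dataclass

@dataclass(frozen=True)
class Issue:
    rule: str          # name of the violated rule
    location: str      # fully qualified name of the offending code element
    debt_minutes: int  # estimated time to fix this single issue (hypothetical)

def key(issue):
    # Identify an issue by what was violated and where, ignoring its cost.
    return (issue.rule, issue.location)

def compare_to_baseline(baseline, current):
    base = {key(i): i for i in baseline}
    curr = {key(i): i for i in current}
    new_issues = [curr[k] for k in curr.keys() - base.keys()]
    fixed_issues = [base[k] for k in base.keys() - curr.keys()]
    debt_delta = sum(i.debt_minutes for i in current) - sum(i.debt_minutes for i in baseline)
    return new_issues, fixed_issues, debt_delta

baseline = [
    Issue("Avoid the singleton pattern", "Compiler.SymbolTable", 30),
    Issue("Fields should be marked as readonly when possible", "Compiler.Lexer.pos", 5),
]
current = [
    Issue("Avoid the singleton pattern", "Compiler.SymbolTable", 30),
    Issue("Avoid types with too many methods", "Compiler.Parser", 60),
]
new, fixed, delta = compare_to_baseline(baseline, current)
print([i.location for i in new])    # ['Compiler.Parser']
print([i.location for i in fixed])  # ['Compiler.Lexer.pos']
print(delta)                        # 55
```

Keying issues on rule and location, rather than on the whole record, means an issue whose estimated cost changed between runs still counts as the same issue instead of as one fixed plus one new.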

NDepend provides metrics such as the number of lines of code, the number of lines that are not my code, etc. There is an estimated development effort, for which I probably need to adjust a setting somewhere so that it can assess time accurately in the future. There is also an indication of my code's cyclomatic complexity, which counts the independent execution paths through the code: every conditional, loop, or other branch adds one more path.
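As a rough illustration of the usual counting approach (one path, plus one for each decision point), here is a Python sketch that measures the cyclomatic complexity of Python source with the standard `ast` module. NDepend analyzes .NET code, and the exact set of constructs it counts as decision points may differ from the set assumed here.

```python
import ast

# Node types that each add one decision point (one extra execution path).
BRANCH_NODES = (ast.If, ast.IfExp, ast.For, ast.While, ast.ExceptHandler, ast.comprehension)

def cyclomatic_complexity(source: str) -> int:
    tree = ast.parse(source)
    complexity = 1  # straight-line code has exactly one path
    for node in ast.walk(tree):
        if isinstance(node, BRANCH_NODES):
            complexity += 1
        elif isinstance(node, ast.BoolOp):
            # `a and b and c` short-circuits: each extra operand is another branch.
            complexity += len(node.values) - 1
    return complexity

example = '''
def classify(n):
    if n < 0:
        return "negative"
    elif n == 0:
        return "zero"
    for d in range(2, n):
        if n % d == 0 and d > 1:
            return "composite"
    return "prime-ish"
'''
print(cyclomatic_complexity(example))  # 6
```

Here the two `if`/`elif` tests, the loop, the inner `if`, and the short-circuiting `and` each add one path to the baseline of one.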

There are also some other common metrics such as the number of types, assemblies, namespaces, methods, fields, source files and “third-party elements”. Although not stated explicitly, that last item seems to be a sum of all types, assemblies, namespaces, methods and fields of all the dependencies of the solution. I’m not entirely sure why this is useful to know, but I guess it doesn’t hurt.

A couple of test cases

I tested the tool on a few projects. One was a work-in-progress compiler written carefully in the most object-oriented way possible. It got a B rating and failed quite a few rules. In my defense, those issues were mostly found in code generated by ANTLR.

Another project was written in a pure functional style: everything is immutable, parameters and return types are simple types (strings, lists, dictionaries, functions), and almost everything is basically an application of Where, Select, Aggregate, etc. This got an A rating from NDepend, which I found cool.

The technical debt estimation

In general, NDepend can give a percentage of the code that contributes to technical debt, as well as a rating and an estimate of the time it would take to bring the code up to an excellent A rating. There is a list of criteria that your assemblies are tested against:

- Quality gates, which are basically large-scale criteria, violation of which implies that the code is not ready for production.

- Rules, which are individual criteria. Some of them are considered critical, and passing all of them is one of the quality gates.

- Issues, which are specific points where your code fails to meet some rules.
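To make the relationship between rules, issues, and quality gates concrete, here is a hypothetical Python model. It assumes the debt ratio is the total debt of all issues divided by the estimated development effort, and it uses SQALE-style rating bands (A up to 5%, B up to 10%, and so on); both are assumptions, and NDepend's actual formulas and thresholds are configurable. The rule names come from the list of predefined rules, but their `critical` flags, the gate names, and all numbers are invented.

```python
from dataclasses import dataclass

@dataclass
class Rule:
    name: str
    critical: bool  # violating a critical rule fails a quality gate

@dataclass
class Issue:
    rule: Rule
    debt_minutes: float  # estimated time to fix this one issue

# Assumed upper bounds (in percent) of the debt ratio for each rating.
RATING_BANDS = [(5, "A"), (10, "B"), (20, "C"), (50, "D")]

def total_debt(issues):
    return sum(i.debt_minutes for i in issues)

def debt_ratio(issues, dev_effort_minutes):
    """Debt as a percentage of the (estimated) effort to write the code."""
    return 100 * total_debt(issues) / dev_effort_minutes

def rating(ratio_percent):
    for upper, letter in RATING_BANDS:
        if ratio_percent <= upper:
            return letter
    return "E"

def minutes_to_rating_a(issues, dev_effort_minutes):
    """Debt that must be paid off to bring the ratio down into the A band."""
    allowed = RATING_BANDS[0][0] * dev_effort_minutes / 100
    return max(0.0, total_debt(issues) - allowed)

def failed_quality_gates(issues, dev_effort_minutes, max_ratio_percent=20):
    """Return the names of the (hypothetical) gates that fail."""
    failed = []
    if any(i.rule.critical for i in issues):
        failed.append("No critical rule violated")
    if debt_ratio(issues, dev_effort_minutes) > max_ratio_percent:
        failed.append("Debt ratio under threshold")
    return failed

singleton = Rule("Avoid the singleton pattern", critical=False)
statics = Rule("Avoid non-readonly static fields", critical=True)
issues = [Issue(singleton, 120), Issue(statics, 30)]
effort = 2000  # hypothetical estimated development effort, in minutes

ratio = debt_ratio(issues, effort)
print(ratio, rating(ratio))                # 7.5 B
print(minutes_to_rating_a(issues, effort)) # 50.0
print(failed_quality_gates(issues, effort))
```

In this model a codebase can earn a good rating and still fail a quality gate, since a single critical issue is enough to block it regardless of how small its debt is.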

The predefined rules

At the heart of NDepend are more than 100 rules that your code is tested against. Each of these rules belongs to a category, such as “Code Smell”, “Design”, “Object Oriented Design”, “Architecture”, “API Breaking Changes”, “Visibility”, and so on. Many of them come from Microsoft guidelines, others from guidelines found in famous design books, and some seem to express community consensus.

Many of the rules I agree with, such as “avoid interfaces too big”, “do not hide base class methods”, “avoid the singleton pattern”, “fields should be marked as readonly when possible”, “avoid static fields with a mutable field type”, “do not declare read only mutable reference types”, “nested types should not be visible”, “avoid types with too many methods”, “declare types in namespaces”, “avoid non-readonly static fields”, and so on. There are others I don’t necessarily agree with, like “constructor should not call a virtual method”, “attribute classes should be sealed”, “enum storage should be Int32”, “avoid public methods not publicly visible”. And some I had never heard of before this tool, like “move P/Invokes to NativeMethods class” (which is pretty embarrassing for me, considering that most of my C# codebases happen to contain P/Invokes).

Each rule offers the rationale behind it. I like that, because the tool doesn’t push you into a cargo cult programmer mindset of blindly following best practices without knowing why. More importantly, though, all of the criteria described above seem to be fully customizable, so you can set your own quality standards and have NDepend enforce them (or at least warn you against violating them).

The overall technical debt metric is actually computed from the individual rules, each of which carries its own custom logic. In our email exchange, the developer pointed to the singleton avoidance rule as an example: a singleton's contribution to the overall technical debt scales linearly with the number of methods that use the offending field, which makes the metric more meaningful than a flat cost per violation would be.
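The developer's example can be sketched as a simple linear cost model. This is only an illustration in Python: the real rule defines its own constants in its source, and `BASE_MINUTES`, `MINUTES_PER_USING_METHOD`, and the example fields are hypothetical.

```python
# Hypothetical constants; the actual rule defines its own.
BASE_MINUTES = 10              # fixed cost of dealing with any singleton
MINUTES_PER_USING_METHOD = 3   # extra cost for every method that touches it

def singleton_debt_minutes(using_methods):
    """Debt grows linearly with the number of distinct methods using the field."""
    return BASE_MINUTES + MINUTES_PER_USING_METHOD * len(set(using_methods))

users_of_instance_field = {
    "Logger.Instance": ["Parser.Parse", "Lexer.Next", "Emitter.Emit", "Program.Main"],
    "Config.Instance": ["Program.Main"],
}
for field, methods in users_of_instance_field.items():
    print(field, singleton_debt_minutes(methods))  # 22, then 13
```

Under this model a singleton touched from one place costs little, while one threaded through the whole codebase dominates the total.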

The rules editor

While reading the panel that explains the rationale behind each rule, I noticed a View Source Code button. Clicking it opened a small editor containing the code that checks that particular criterion, and that code is easy to access and to edit. The query language seems to be a custom dialect of LINQ, which its creators call CQLINQ.

Of course, we’ve been able to write analysis passes for a while now using Roslyn, but I tend to be too lazy to do that. I must also say that I’ve never felt happy whenever I’ve had to use the .NET Reflection API — it felt like it required too much ceremony to achieve even simple results. The Mono.Cecil API was decidedly better overall, but when I last checked it out, it still left a few things to be desired from a usability perspective.

By contrast, the API that NDepend exposes to CQLINQ seems to cut through the bullshit and dive directly into various interesting characteristics of the examined codebase. I can effortlessly filter out all third-party code, look for compiler-generated types, find all the methods that use or assign a particular field, check for immutability, and so on. The editor offers syntax highlighting and auto-complete support with API hints, which helps immensely if you want to jump right in.

At the end of each query, you are expected to return an object that has all the information you want to communicate regarding your rule. The object can be of an anonymous type, and the results window will automatically pick up on its fields and generate the necessary columns to present your results. Each rule is compiled and run against your code as you type, showing you the results live, which should speed up the process of composing and testing them.
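CQLINQ rules themselves are written in C#-style LINQ syntax against NDepend's code model, which I won't try to reproduce here. As a loose Python analogy of the workflow described above, the sketch below treats a rule as a query that filters out third-party code, keeps the offenders, and projects each match into an ad-hoc record, then derives the result columns from that record's fields. `TypeInfo`, the threshold of 20 methods, and the sample types are all hypothetical.

```python
from dataclasses import dataclass

@dataclass
class TypeInfo:
    full_name: str
    nb_methods: int
    is_third_party: bool

def avoid_types_with_too_many_methods(types, threshold=20):
    # Filter, then project each match into a record (like an anonymous type).
    return [
        {"type": t.full_name, "methods": t.nb_methods}
        for t in types
        if not t.is_third_party and t.nb_methods > threshold
    ]

def render_table(rows):
    # Derive column headers from the record fields, the way the results
    # window derives columns from an anonymous type's properties.
    if not rows:
        return "(no issues)"
    columns = list(rows[0].keys())
    widths = {c: max(len(c), *(len(str(r[c])) for r in rows)) for c in columns}
    lines = ["  ".join(c.ljust(widths[c]) for c in columns)]
    for r in rows:
        lines.append("  ".join(str(r[c]).ljust(widths[c]) for c in columns))
    return "\n".join(lines)

types = [
    TypeInfo("Compiler.Parser", 42, False),
    TypeInfo("Compiler.Lexer", 12, False),
    TypeInfo("Antlr4.Runtime.Parser", 90, True),  # third-party, filtered out
]
rows = avoid_types_with_too_many_methods(types)
print(render_table(rows))
```

Adding a field to the projected record is all it takes to get another column, which mirrors the convenience of returning an anonymous type from a query.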

Other features

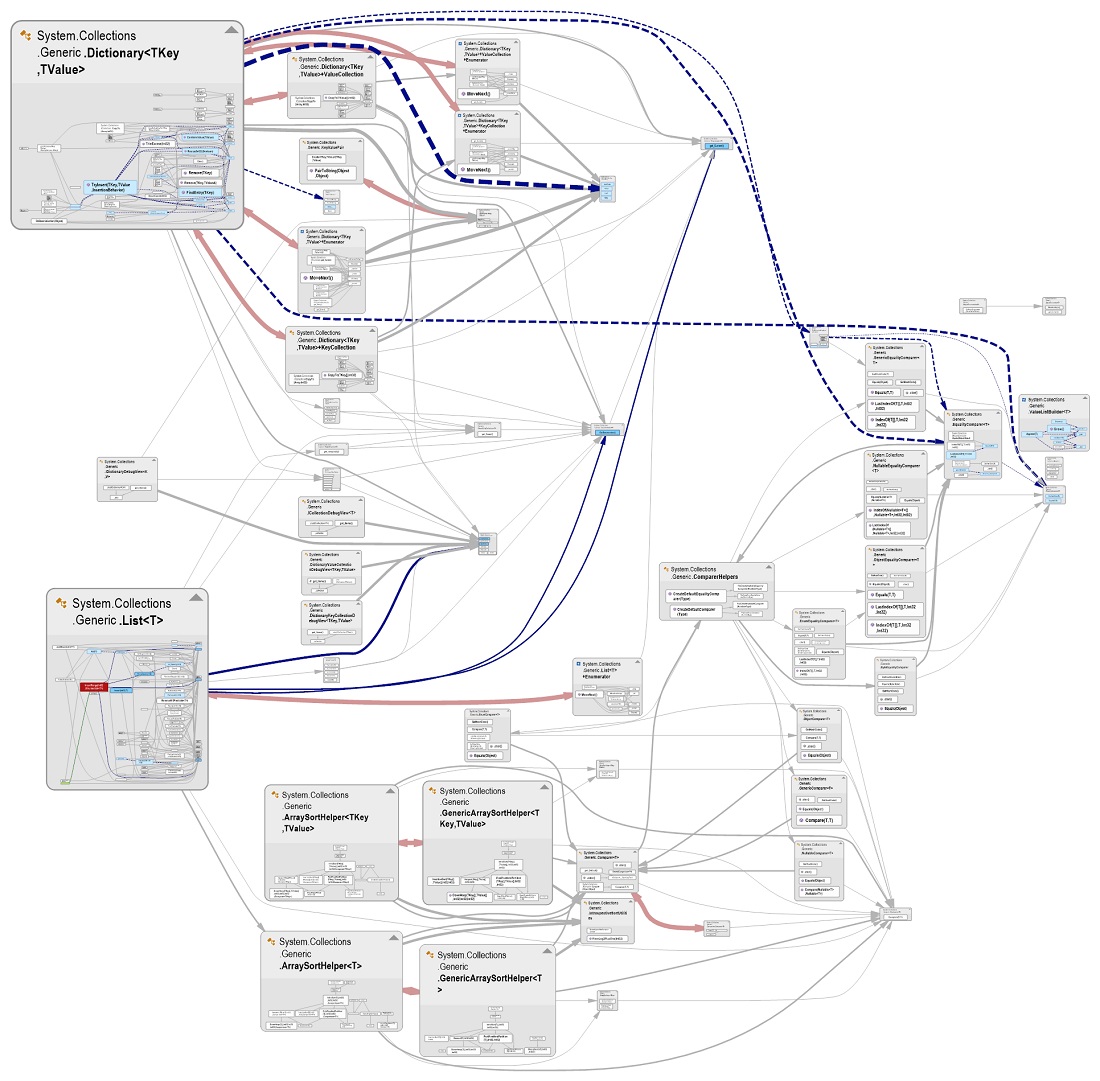

Another feature of NDepend is the dependency matrix. This shows all the projects in the solution on one axis and all the projects plus referenced libraries on the other, with each cell representing the dependencies between the corresponding pair. The view can be broken down further into lower-level parts.
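Here is a small Python sketch of how such a matrix could be assembled: fine-grained dependencies (type to type) are rolled up to their containing projects, then counted into rows and columns. Using the number of type-level dependencies as the cell weight is an assumption for illustration, and all names here are made up.

```python
def roll_up(edges, owner):
    """Aggregate (source, target) pairs into weighted edges between their owners."""
    totals = {}
    for src, dst in edges:
        key = (owner[src], owner[dst])
        if key[0] != key[1]:  # ignore dependencies inside a single container
            totals[key] = totals.get(key, 0) + 1
    return totals

def dependency_matrix(weights, rows, cols):
    """Cell [r][c] holds how strongly row element r depends on column element c."""
    return [[weights.get((r, c), 0) for c in cols] for r in rows]

owner = {
    "App.Program": "App",
    "Core.Ast": "Core",
    "Core.Node": "Core",
    "Parser.Frontend": "Parser",
    "Antlr4.Runtime.Parser": "Antlr4.Runtime",
    "Antlr4.Runtime.Lexer": "Antlr4.Runtime",
}
type_edges = [
    ("App.Program", "Core.Ast"),
    ("App.Program", "Parser.Frontend"),
    ("Parser.Frontend", "Core.Ast"),
    ("Parser.Frontend", "Antlr4.Runtime.Parser"),
    ("Parser.Frontend", "Antlr4.Runtime.Lexer"),
    ("Core.Ast", "Core.Node"),  # internal to Core, so not shown
]
projects = ["App", "Core", "Parser"]
columns = projects + ["Antlr4.Runtime"]  # projects plus referenced libraries
matrix = dependency_matrix(roll_up(type_edges, owner), projects, columns)
for name, row in zip(projects, matrix):
    print(name.ljust(8), row)
```

Drilling down into a cell then amounts to rebuilding the matrix from the finer-grained edges instead of the rolled-up ones.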

At any point you can switch to another view, which shows (at your current level) a graph of your dependencies. You can start by viewing project dependencies, then step into them to view namespace dependencies, then type dependencies, then member dependencies. I found it somewhat counter-intuitive that this isn't the default double-click behavior of each graph node; instead, I had to open the context menu at each level to dive in.

The dependency graph offers a choice of views: you can ask for fixed-size nodes, or size them by cyclomatic complexity, coupling, and so on. Similarly, you can ask for constant-thickness edges, or have the thickness represent the number of namespaces, methods, etc.

There is also a code metrics view that is basically a color-coded map of your solution with respect to a metric of your choice. Again, the available metrics include lines of code, cyclomatic complexity, and so on.

One metric I found interesting is method rank, which (according to the documentation that helpfully pops up when you click the nearby button) is the result of applying Google's PageRank algorithm to the method dependency graph, helping you spot methods in which bugs “will likely be more catastrophic”. I found the results of this metric quite surprising, in that they were almost the opposite of what I would have expected. But maybe if I spend more time studying it, I'll realize why.
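For readers unfamiliar with PageRank, here is a minimal Python version run over a toy call graph. It assumes edges point from caller to callee, so a method inherits rank from the methods that depend on it; the damping factor of 0.85 is the value commonly used for PageRank, and NDepend's exact graph construction and parameters may differ. The method names are hypothetical.

```python
def page_rank(graph, damping=0.85, iterations=50):
    """graph: dict mapping each method to the list of methods it calls."""
    nodes = set(graph) | {m for callees in graph.values() for m in callees}
    n = len(nodes)
    rank = {m: 1.0 / n for m in nodes}
    for _ in range(iterations):
        # Rank held by methods that call nothing is spread evenly over all nodes.
        dangling = sum(rank[m] for m in nodes if not graph.get(m))
        new_rank = {m: (1 - damping) / n + damping * dangling / n for m in nodes}
        for caller, callees in graph.items():
            if callees:
                share = damping * rank[caller] / len(callees)
                for callee in callees:
                    new_rank[callee] += share
        rank = new_rank
    return rank

calls = {
    "Program.Main": ["Parser.Parse", "Emitter.Emit"],
    "Parser.Parse": ["Parser.ParseExpr", "Lexer.Next"],
    "Parser.ParseExpr": ["Lexer.Next"],
    "Emitter.Emit": [],
}
ranks = page_rank(calls)
for method, score in sorted(ranks.items(), key=lambda kv: -kv[1]):
    print(f"{score:.3f}  {method}")
```

In this toy graph, `Lexer.Next` ranks highest because the parsing code funnels into it, while the entry point `Program.Main`, which nothing calls, ranks lowest.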

Another feature worth mentioning is a plot with two axes named “abstractness” and “instability”. According to the documentation, an assembly is more “abstract” if it contains more abstract types. I have to say I'm not crazy about this particular criterion; I would have favored the genericity of types and methods as a more meaningful measure of abstractness. Similarly, an assembly is more “stable” if other assemblies' dependencies on its types and members make it more of a pain to modify. Any assembly that's concrete but very stable is placed in the “zone of pain” of the diagram, while an assembly that's abstract and unstable is placed in the “zone of uselessness”.
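These terms come from Robert C. Martin's package metrics, on which the plot appears to be based: abstractness A is the ratio of abstract types to all types, instability I is efferent coupling divided by total coupling, Ce / (Ca + Ce), and the ideal “main sequence” is the line A + I = 1. The Python sketch below computes these for a few invented assemblies; the 0.5 distance threshold used to label the zones is a hypothetical choice, not NDepend's.

```python
from dataclasses import dataclass

@dataclass
class AssemblyStats:
    name: str
    abstract_types: int  # abstract classes and interfaces
    total_types: int
    afferent: int        # Ca: outside types that depend on this assembly
    efferent: int        # Ce: outside types this assembly depends on

def abstractness(a):
    return a.abstract_types / a.total_types if a.total_types else 0.0

def instability(a):
    coupling = a.afferent + a.efferent
    # An assembly with no coupling at all is treated as fully stable here.
    return a.efferent / coupling if coupling else 0.0

def zone(a, threshold=0.5):
    """Classify by distance from the main sequence A + I = 1."""
    total = abstractness(a) + instability(a)
    if abs(total - 1) <= threshold:
        return "near the main sequence"
    return "zone of pain" if total < 1 else "zone of uselessness"

assemblies = [
    AssemblyStats("Core", abstract_types=0, total_types=40, afferent=30, efferent=2),
    AssemblyStats("Plugins.Contracts", abstract_types=9, total_types=10, afferent=0, efferent=5),
    AssemblyStats("App", abstract_types=1, total_types=10, afferent=0, efferent=12),
]
for a in assemblies:
    print(f"{a.name}: A={abstractness(a):.2f} I={instability(a):.2f} -> {zone(a)}")
```

A concrete assembly that many others depend on (`Core`) lands in the zone of pain, while a mostly abstract one that nothing depends on (`Plugins.Contracts`) lands in the zone of uselessness.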

Final notes

I feel I’ve only scratched the surface, but I don’t want to drag this on too much. There are a lot more features in NDepend, and a lot more depth in the features I did cover.

Overall, NDepend is a great tool for use with codebases that have high standards in code quality, for locating and assessing specific issues, and for communicating in concrete numbers the importance of tending to those issues.

Author Theodoros Chatzigiannakis

LastMod 2018-09-24